Retrieval-Augmented Generation (RAG) has rapidly become the backbone of enterprise AI. However, deploying these systems without a rigorous evaluation framework is a high-risk gamble. Inconsistent or inaccurate RAG performance can directly undermine critical business objectives, erode customer trust, and create significant compliance risks.

This guide provides a comprehensive framework for enterprise leaders to implement robust RAG evaluation and benchmarking. We will cover the essential metrics, leading frameworks, and production-ready best practices required to ensure your RAG systems are reliable, accurate, and continuously improving in demanding enterprise environments.

Understanding RAG: The Foundation of Modern Enterprise AI

At its core, Retrieval-Augmented Generation (RAG) gives your AI models access to a real-time, curated knowledge base. Instead of relying solely on static training data, a RAG system retrieves current, relevant information from your internal documents and databases before generating a response. This keeps answers grounded in your organization’s specific data, which makes them far more likely to be accurate and up to date.

Traditional RAG works like a librarian, in three steps:

1. Retrieve: pull current, relevant passages from your business documents and databases.

2. Augment: add those passages to the model’s prompt as context.

3. Generate: produce a response grounded in that context.

The next evolution, Agentic RAG, works more like a research team. Instead of a single retrieve-then-generate pass, it cycles through thinking, using tools, and adapting: it can decompose complex questions, draw on multiple tools and data sources, and adjust its retrieval strategy to produce comprehensive, precise answers.
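The three traditional steps (retrieve, augment, generate) can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not a production design: the keyword-overlap retriever stands in for vector or hybrid search, `DOCS` is made-up example data, and `llm` is any function that maps a prompt string to a reply (a placeholder for your model client). All function names are hypothetical.

```python
import re
from typing import Callable

# Hypothetical example data standing in for chunks of internal documents.
DOCS = [
    "Refunds are available within 30 days of purchase with a receipt.",
    "Enterprise plans include 24/7 phone support.",
    "Passwords must be rotated every 90 days.",
]


def tokenize(text: str) -> set[str]:
    """Lowercase word set used by the toy retriever."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query: str, docs: list[str], k: int = 2) -> list[str]:
    """Step 1 (Retrieve): rank docs by word overlap with the query, keep the top k.
    Word overlap is a stand-in for vector or hybrid search."""
    query_terms = tokenize(query)
    ranked = sorted(docs, key=lambda d: len(query_terms & tokenize(d)), reverse=True)
    return ranked[:k]


def augment(query: str, contexts: list[str]) -> str:
    """Step 2 (Augment): place the retrieved passages in the prompt."""
    context_block = "\n".join(f"- {c}" for c in contexts)
    return (
        "Answer using only the context below. If the context does not contain "
        "the answer, say you don't know.\n\n"
        f"Context:\n{context_block}\n\nQuestion: {query}\nAnswer:"
    )


def generate(prompt: str, llm: Callable[[str], str]) -> str:
    """Step 3 (Generate): call the model; `llm` is a placeholder for your client."""
    return llm(prompt)


def rag_answer(query: str, llm: Callable[[str], str], k: int = 2) -> str:
    """Run the full retrieve -> augment -> generate pipeline for one query."""
    contexts = retrieve(query, DOCS, k)
    return generate(augment(query, contexts), llm)
```

Swapping `retrieve` for a real search call and `llm` for a real model client leaves the structure unchanged. Keeping the steps separate also makes evaluation easier: retrieval quality and generation quality can be scored independently.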

Enterprise Applications and the Business Case

- Customer Support: RAG powers instant, accurate answers from policy documents. LinkedIn, for example, reported reducing issue resolution time by over 28% with a RAG-based support system.

- Knowledge Management: Employees can instantly find internal procedures and subject-matter expertise, breaking down information silos.

- Sales & Finance: Teams access real-time product information, competitive analysis, and market data for automated, accurate reporting.

- Legal & HR: Contract analysis, compliance verification, and employee policy questions are handled with speed and precision.

The business case is clear: RAG ensures AI systems operate with current data, provide contextually relevant answers, and automate high-effort information retrieval tasks.

Why RAG Benchmarking Is Mission-Critical

Deploying an unevaluated RAG system is a significant business risk. The consequences of poor performance directly impact your bottom line:

- Customer Trust Erosion: Incorrect information provided by an AI can damage brand credibility.

- Compliance Violations: In regulated industries like finance and healthcare, inaccurate AI outputs can lead to severe penalties.

- Operational Inefficiency: Employees will abandon tools they cannot trust, negating any potential productivity gains.

Industry data from 2024 shows that 50% of organizations cite evaluation as their second-greatest challenge in AI deployment. A seemingly manageable 5% hallucination rate in a pilot scales into hundreds of errors per day in production: at 10,000 queries per day, for example, 5% means roughly 500 incorrect answers, creating operational drag and reputational damage.

A systematic benchmarking framework provides the foundation for performance optimization, risk mitigation, and continuous improvement, giving stakeholders confidence through transparent, measurable quality metrics.

Comprehensive RAG Evaluation Frameworks: A Ranked Guide

The RAG evaluation landscape has matured rapidly, with over 1,200 research papers published in 2024 alone. This reflects RAG’s shift from an experimental technology to mission-critical enterprise infrastructure.

Tier 1: Enterprise-Grade Commercial Platforms

- Galileo AI (Highest Enterprise Adoption): Purpose-built for enterprise needs, Galileo offers proprietary evaluation metrics that the company reports are 1.65x more accurate than baseline methods. It suits large organizations that require robust production monitoring, regulatory compliance, and dedicated support.

- LangSmith (Best Comprehensive Platform): For organizations heavily invested in the LangChain ecosystem, LangSmith provides an end-to-end platform for evaluation, experiment tracking, and production monitoring.

Tier 2: Open-Source Leaders

- RAGAS (Most Popular Open Source): A pioneer in reference-free evaluation, RAGAS is excellent for initial implementations and cost-conscious deployments. Its core metrics (Faithfulness, Answer Relevancy, Context Precision, and Context Recall) have become industry standards.

- TruLens (Enterprise-Backed Open Source): With backing from Snowflake, TruLens offers enterprise credibility. Its RAG Triad methodology (Context Relevance, Groundedness, Answer Relevance) and strong visualization capabilities make it ideal for debugging.

Tier 3: Specialized Solutions for Development Teams

- DeepEval (Best for CI/CD Integration): Positioned as “Pytest for LLMs,” DeepEval integrates seamlessly into development workflows, offering over 14 metrics and synthetic data generation.

- Arize Phoenix (Best for Multimodal): For organizations working with images, documents, and other mixed media, Phoenix offers superior multimodal RAG support and an excellent debugging interface.

Essential RAG Evaluation Metrics Explained

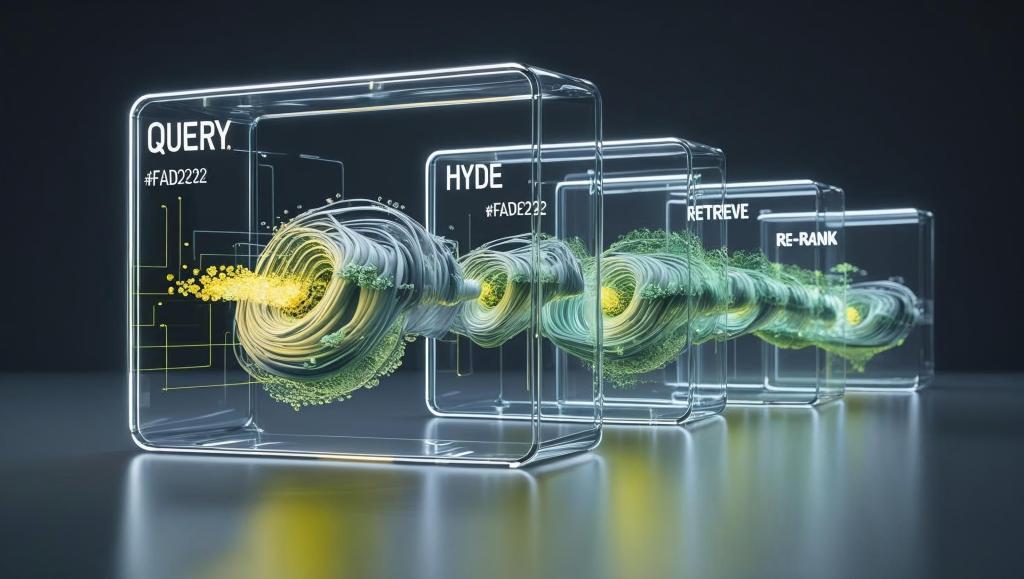

Effective RAG evaluation hinges on understanding three critical relationships: query-to-context, context-to-response, and query-to-response.

The RAG Triad: Core Quality Measurements

- Context Relevance (Query ↔ Retrieved Context): Measures whether the retrieved documents are relevant to the user’s query. Irrelevant context is the primary cause of off-topic AI responses.

  - Technical Detail: This is typically calculated with an LLM-as-a-judge that rates how relevant each retrieved text chunk is to the user’s query. The final score is the average of these per-chunk relevance scores.

  - Enterprise Target: >70%.

- Faithfulness/Groundedness (Context ↔ Response): Measures whether the AI’s response is supported by the retrieved context. This is your primary defense against hallucinations that can damage business credibility.

  - Technical Detail: The generated response is first broken down into individual statements. Each statement is then checked against the retrieved context to determine whether the context supports it. The faithfulness score is the number of supported statements divided by the total number of statements. Note that this measures consistency with the context, not real-world truth: an answer can be perfectly faithful to an outdated document.

  - Enterprise Target: >90% for high-stakes applications.

- Answer Relevance (Query ↔ Response): Measures whether the final answer directly addresses the user’s original question and intent. This is critical for user satisfaction in customer-facing applications.

  - Technical Detail: This metric typically uses an LLM to assess the semantic alignment between the user’s query and the generated answer. It focuses on usefulness and directness, penalizing answers that are factually correct but fail to address the core question.

  - Enterprise Target: >85%.
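The triad calculations described above can be sketched as follows, assuming the LLM calls are injected as plain functions: `judge` returns a relevance score in [0, 1] and `verify` decides whether the context supports a statement. The sentence splitter and the word-overlap `toy_verify` are hypothetical stand-ins for LLM-based claim extraction and verification; frameworks such as RAGAS and TruLens implement their own versions. The thresholds are the enterprise targets quoted above.

```python
import re
from statistics import mean
from typing import Callable

Judge = Callable[[str, str], float]    # (query, text) -> relevance score in [0, 1]
Verifier = Callable[[str, str], bool]  # (statement, context) -> is it supported?

# Enterprise targets quoted in this guide.
TARGETS = {"context_relevance": 0.70, "faithfulness": 0.90, "answer_relevance": 0.85}


def split_statements(answer: str) -> list[str]:
    """Naive sentence split; real frameworks use an LLM to extract atomic claims."""
    return [s.strip() for s in re.split(r"(?<=[.!?])\s+", answer) if s.strip()]


def context_relevance(query: str, chunks: list[str], judge: Judge) -> float:
    """Average of the judge's relevance score for each retrieved chunk."""
    return mean(judge(query, chunk) for chunk in chunks) if chunks else 0.0


def faithfulness(answer: str, context: str, verify: Verifier) -> float:
    """Supported statements divided by total statements in the answer."""
    statements = split_statements(answer)
    if not statements:
        return 0.0
    return sum(verify(s, context) for s in statements) / len(statements)


def answer_relevance(query: str, answer: str, judge: Judge) -> float:
    """A single judge score for how directly the answer addresses the query."""
    return judge(query, answer)


def meets_targets(scores: dict[str, float]) -> dict[str, bool]:
    """Compare each triad score with its target (strictly greater, as in '>90%')."""
    return {name: scores[name] > target for name, target in TARGETS.items()}


def _words(text: str) -> set[str]:
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def toy_verify(statement: str, context: str) -> bool:
    """Stand-in verifier: every word of the statement must appear in the context."""
    return _words(statement) <= _words(context)
```

For example, `faithfulness("Refunds take 30 days. Shipping is free.", "Refunds take 30 days to process.", toy_verify)` scores 0.5: the first statement is supported by the context and the second is not. Injecting the judge as a function keeps the scoring logic testable and lets you swap judge models without touching it.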

Advanced Retrieval Metrics

- Context Precision: Measures whether the most relevant documents are ranked highest in the retrieval results. It’s a classic information retrieval metric adapted for RAG, focusing on the quality of the top-ranked retrieved items.

  - Technical Detail: This metric evaluates ranking order by checking where the ground-truth relevant items appear within the top-$K$ retrieved contexts. At each rank $k$, precision is $\text{Precision@}k = \frac{TP@k}{TP@k + FP@k}$, counting true and false positives within the top $k$ results. Rank-aware implementations such as RAGAS then average these values over the positions that hold relevant items: $\text{Context Precision@}K = \frac{\sum_{k=1}^{K} \text{Precision@}k \cdot v_k}{\text{number of relevant items in the top } K}$, where $v_k = 1$ if the item at rank $k$ is relevant and $0$ otherwise.

  - Why It Matters: Crucial for ensuring the AI focuses on the most important information first, especially when the context window is limited.

- Context Recall: Measures whether the retrieved context contains all the information needed to answer the query completely. It prioritizes comprehensiveness over ranking order.

  - Technical Detail: This metric compares the ground-truth statements required to answer a question against the statements present in the retrieved context. It is calculated as $\text{Context Recall} = \frac{TP}{TP + FN}$, where true positives are required statements found in the retrieved context and false negatives are required statements missing from it.

  - Why It Matters: Essential for applications where missing information is costly, such as legal research or compliance verification.

- Mean Reciprocal Rank (MRR): Measures how quickly the system finds the first relevant document in a ranked list of results.

  - Technical Detail: For each query, take the reciprocal of the rank of the first relevant result (1 if the first document is relevant, 1/2 if the second is, and so on; 0 if none is). MRR is the average of these values across a set of queries.

  - Why It Matters: Critical for real-time question-answering systems where finding the first useful piece of information quickly is paramount.

- Normalized Discounted Cumulative Gain (NDCG): A metric that accounts for graded relevance (i.e., some documents are more useful than others) and the position of those documents in the search results.

  - Technical Detail: NDCG assigns a relevance score (gain) to each document and divides it by a logarithmic discount based on its rank, commonly $\log_2(\text{rank} + 1)$; the sum of these discounted gains is the DCG. Dividing by the DCG of the ideal ordering normalizes the score to the range 0 to 1, which rewards systems that place highly relevant documents at the very top and allows fair comparison across queries.

  - Why It Matters: Best for complex search scenarios where a simple relevant/not-relevant binary is insufficient.
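A plain-Python sketch of the four retrieval metrics follows. It assumes relevance judgments are already available as lists ordered by rank (binary labels for context precision and MRR, graded gains for NDCG) and that ground-truth and retrieved statements have been normalized into comparable sets. The NDCG function uses linear gains and computes the ideal ordering from the retrieved list only, which is a simplifying assumption; some implementations use exponential gains ($2^{rel} - 1$) or an ideal ranking over all judged documents.

```python
import math


def context_precision_at_k(relevance: list[int], k: int) -> float:
    """Rank-aware context precision. relevance[i] is 1 if the item at rank i+1
    is relevant, else 0. Averages Precision@i over relevant ranks i <= k."""
    hits, total = 0, 0.0
    for i, rel in enumerate(relevance[:k], start=1):
        if rel:
            hits += 1
            total += hits / i  # Precision@i = TP@i / (TP@i + FP@i) = hits / i
    return total / hits if hits else 0.0


def context_recall(ground_truth: set[str], retrieved: set[str]) -> float:
    """TP / (TP + FN): share of required ground-truth statements that were retrieved."""
    if not ground_truth:
        return 0.0
    return len(ground_truth & retrieved) / len(ground_truth)


def reciprocal_rank(relevance: list[int]) -> float:
    """1 / rank of the first relevant item, or 0 if nothing relevant was retrieved."""
    for i, rel in enumerate(relevance, start=1):
        if rel:
            return 1.0 / i
    return 0.0


def mean_reciprocal_rank(runs: list[list[int]]) -> float:
    """Average reciprocal rank across queries (one relevance list per query)."""
    return sum(reciprocal_rank(r) for r in runs) / len(runs) if runs else 0.0


def dcg_at_k(gains: list[float], k: int) -> float:
    """Sum of graded gains, each divided by log2(rank + 1)."""
    return sum(g / math.log2(i + 1) for i, g in enumerate(gains[:k], start=1))


def ndcg_at_k(gains: list[float], k: int) -> float:
    """DCG normalized by the DCG of the best possible ordering of the same gains."""
    ideal = dcg_at_k(sorted(gains, reverse=True), k)
    return dcg_at_k(gains, k) / ideal if ideal > 0 else 0.0
```

As a worked example, relevance labels `[1, 0, 1]` give Precision@1 = 1 and Precision@3 = 2/3 at the two relevant positions, so `context_precision_at_k([1, 0, 1], 3)` is (1 + 2/3) / 2 = 5/6. Moving the second relevant item up to rank 2 would raise the score to 1.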

Production-Ready Evaluation Strategies

Automated vs. Human Evaluation

- LLM-as-Judge: This automated approach uses a powerful LLM to evaluate a RAG system’s output based on a detailed scoring rubric. It’s scalable and cost-effective but may lack nuanced judgment on highly specialized topics.

- Human Evaluation: Domain experts manually assess outputs for accuracy, completeness, and tone. While less scalable, it provides the highest quality of evaluation and is essential for validating automated systems.
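One common way to validate an LLM judge against domain experts is to have both label the same sample of outputs (for example, pass/fail) and measure their chance-corrected agreement with Cohen’s kappa, where 1 means perfect agreement and 0 means no better than chance. The sketch below is a plain-Python implementation of the standard formula; the label values and the function name are illustrative.

```python
from collections import Counter


def cohens_kappa(judge_labels: list[str], human_labels: list[str]) -> float:
    """Chance-corrected agreement between two raters labeling the same items.
    1.0 = perfect agreement, 0.0 = no better than chance."""
    if not judge_labels or len(judge_labels) != len(human_labels):
        raise ValueError("need two non-empty label lists of equal length")
    n = len(judge_labels)
    # Observed agreement: share of items where both raters chose the same label.
    observed = sum(a == b for a, b in zip(judge_labels, human_labels)) / n
    # Expected agreement if each rater labeled at random with their own label frequencies.
    judge_counts, human_counts = Counter(judge_labels), Counter(human_labels)
    expected = sum(judge_counts[c] * human_counts[c] for c in judge_counts) / (n * n)
    if expected == 1.0:
        return 1.0  # both raters used one identical label for every item
    return (observed - expected) / (1 - expected)
```

For instance, a judge that labels four answers `pass, pass, fail, fail` while the expert labels them `pass, fail, fail, fail` agrees on 75% of items, but 50% agreement would be expected by chance, so kappa is 0.5. A low kappa is a signal to refine the judge’s rubric before trusting it at scale.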

Benchmarking Datasets and Methodologies

The industry is moving beyond academic datasets to benchmarks that reflect real-world enterprise complexity.

- RAGBench (Industry Gold Standard): One of the first large-scale benchmarks built on real industry documents, designed to give a closer measure of production performance.

- CRAG (Meta’s Comprehensive Benchmark): A powerful benchmark that tests a system’s ability to handle dynamic, time-sensitive information and structured data.

- Domain-Specific Benchmarks: Datasets like LegalBench-RAG and MIRAGE (Medical) provide specialized evaluation for regulated industries.

Implementation Roadmap and Best Practices

Moving from theory to production requires a phased approach.

Production Monitoring Architecture

Your monitoring stack must track three layers of metrics in real time:

- System Performance: Latency, throughput, error rates.

- RAG-Specific Metrics: Retrieval accuracy, generation quality, hallucination rates.

- Business Metrics: User satisfaction, task completion rates, cost per query.

Integrate this data with your existing SIEM and APM tools (e.g., Datadog, New Relic) and establish automated alerting for performance degradation.
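Automated alerting on RAG-specific metrics can start as simply as a rolling average. The sketch below assumes each production response already receives a quality score (for example, a faithfulness score from an LLM judge); the class name, window size, and `min_samples` guard are illustrative choices, not settings from any particular monitoring product.

```python
from collections import deque
from statistics import mean


class RollingQualityMonitor:
    """Tracks one RAG quality metric (for example, per-response faithfulness)
    over the most recent `window` responses and signals when the rolling
    mean falls below `threshold`."""

    def __init__(self, threshold: float, window: int = 100, min_samples: int = 20):
        if not 0 < min_samples <= window:
            raise ValueError("min_samples must satisfy 0 < min_samples <= window")
        self.threshold = threshold
        self.min_samples = min_samples
        self.scores: deque[float] = deque(maxlen=window)  # oldest scores drop off

    def record(self, score: float) -> bool:
        """Add one score; return True when an alert should fire."""
        self.scores.append(score)
        if len(self.scores) < self.min_samples:
            return False  # too few samples to judge the trend yet
        return mean(self.scores) < self.threshold
```

In production, a `True` from `record` would be routed to whatever alerting hook your APM or paging tool provides; that integration is deliberately left out here. The `min_samples` guard avoids firing on a handful of early low scores.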

Implementation Phases

- Phase 1: Foundation (Weeks 1-4): Set up infrastructure, data pipelines, and a baseline evaluation framework (e.g., RAGAS) with a “golden dataset” of at least 100 high-quality, expert-validated QA pairs.

- Phase 2: Production Deployment (Weeks 5-8): Implement advanced features like reranking and guardrails. Deploy a comprehensive observability stack with real-time quality checks.

- Phase 3: Optimization (Weeks 9-12): Analyze real usage patterns to tune performance. Establish a continuous improvement loop by feeding production data and user feedback back into your evaluation datasets.
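Phase 1’s golden dataset is easier to maintain if it is validated automatically whenever it changes. The sketch below assumes a hypothetical JSONL schema with `id`, `question`, `reference_answer`, and `reviewed_by` fields; adapt the field names to whatever your evaluation framework expects. It rejects incomplete or duplicate records and enforces the 100-example minimum recommended above.

```python
import json
from dataclasses import dataclass

# Hypothetical schema; rename fields to match your evaluation framework.
REQUIRED_FIELDS = ("id", "question", "reference_answer", "reviewed_by")


@dataclass(frozen=True)
class GoldenExample:
    id: str
    question: str
    reference_answer: str
    reviewed_by: str  # the domain expert who validated this pair


def load_golden_dataset(lines: list[str], min_size: int = 100) -> list[GoldenExample]:
    """Parse JSONL lines into validated examples.

    Rejects records with missing or blank fields and duplicate ids, and
    enforces a minimum dataset size."""
    examples: list[GoldenExample] = []
    seen_ids: set[str] = set()
    for lineno, line in enumerate(lines, start=1):
        if not line.strip():
            continue  # tolerate blank lines
        record = json.loads(line)
        missing = [
            f for f in REQUIRED_FIELDS
            if not isinstance(record.get(f), str) or not record[f].strip()
        ]
        if missing:
            raise ValueError(f"line {lineno}: missing or empty fields {missing}")
        if record["id"] in seen_ids:
            raise ValueError(f"line {lineno}: duplicate id {record['id']!r}")
        seen_ids.add(record["id"])
        examples.append(GoldenExample(**{f: record[f] for f in REQUIRED_FIELDS}))
    if len(examples) < min_size:
        raise ValueError(f"only {len(examples)} examples; need at least {min_size}")
    return examples
```

Typical usage would read a file such as a hypothetical `golden.jsonl` and pass `f.readlines()` to `load_golden_dataset`; running this check in CI keeps the dataset trustworthy as production examples are fed back into it in Phase 3.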

Future Trends and Strategic Recommendations

The RAG evaluation landscape is evolving rapidly. Key trends for 2025 include the integration of GraphRAG for knowledge graph-based retrieval, the rise of multi-agent evaluation frameworks, and the standardization of metrics across enterprise platforms.

Strategic Recommendations for Enterprise Success:

- Establish a Performance Baseline Now: You cannot improve what you do not measure. Implement a framework like RAGAS immediately to understand your current system’s performance.

- Adopt a Multi-Framework Approach: Combine scalable automated evaluation (LLM-as-Judge) with targeted human review (domain experts) for comprehensive quality assurance.

- Make Evaluation a Core Part of Your MLOps Cycle: Integrate RAG benchmarking into your CI/CD pipelines to catch regressions before they reach production.

- Foster a Culture of Quality: Ensure cross-functional collaboration between data scientists, engineers, and business domain experts in the design and review of your evaluation framework.
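One way to make evaluation part of CI/CD is a test that compares each run’s metric scores with a stored baseline and fails on a meaningful drop. The sketch below is written as a pytest-discoverable test function but uses only plain Python; the 0.02 tolerance, metric names, and hard-coded scores are assumptions for illustration.

```python
def find_regressions(
    baseline: dict[str, float], current: dict[str, float], tolerance: float = 0.02
) -> list[str]:
    """List metrics that are missing from the current run or that fell more
    than `tolerance` below their stored baseline score."""
    problems = []
    for metric, base in baseline.items():
        if metric not in current:
            problems.append(f"{metric}: missing from current run")
        elif current[metric] < base - tolerance:
            problems.append(
                f"{metric}: {current[metric]:.3f} is below baseline {base:.3f} "
                f"minus tolerance {tolerance}"
            )
    return problems


def test_no_rag_regressions():
    # Hypothetical values; in CI, load the stored baseline and the scores from a
    # fresh evaluation run on the golden dataset instead.
    baseline = {"faithfulness": 0.93, "answer_relevance": 0.88}
    current = {"faithfulness": 0.94, "answer_relevance": 0.87}
    assert find_regressions(baseline, current) == []
```

The tolerance absorbs run-to-run noise from LLM-based judges; setting it to zero would make the gate flaky, while setting it too high would let real regressions through.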

The organizations that invest in comprehensive evaluation today will build the reliable, trustworthy AI applications that win tomorrow. Success requires embedding a quality-first mindset across the entire AI development lifecycle.