As a mentor to AI startup founders through programs at Stanford, including the Stanford LISA and the SEED program, I often encounter a recurring pattern. A founder, brilliant and driven, will lay out a visionary technical roadmap but classify ethics as a “Day 100” problem, a compliance hurdle to be cleared later.

My response is always the same: you’re thinking about it backwards. Responsible AI isn’t a brake; it’s a steering wheel. It’s the core of a strategy that builds enduring companies. In a world of increasing regulatory scrutiny and consumer skepticism, building ethically is no longer just the right thing to do; it is the only way to secure a lasting competitive advantage. It builds profound customer trust, mitigates catastrophic reputational and legal risks, and is a powerful differentiator for attracting top-tier investors and talent.

This guide is the first of a four-part series designed to be the playbook I share with the founders I mentor. We will move beyond abstract theory to provide a concrete roadmap for embedding ethics into your startup’s DNA. This first article lays the essential foundation: the 10 core principles that must guide your AI product design.

Responsible AI: Your Steering Wheel

Ethical AI is the core of a strategy that builds trust, mitigates risk, and attracts premier investors and talent.

[Infographic: three statistics; the percentage values are not shown]

- Share of CEOs who agree ethical AI is a competitive advantage. Source: PwC, 2023 AI Business Survey
- Share of AI systems in use that exhibit some form of bias, a near-certain risk. Source: World Economic Forum, 2023
- Share of consumers who are more loyal to companies perceived as ethical. Source: IBM Institute for Business Value, 2022

The 10 Core Principles of Ethical AI Product Design

A set of principles is not a mere checklist to be ticked off; it is a North Star for your product development. These 10 principles represent a synthesis of leading global frameworks, including the influential OECD AI Principles and Australia’s AI Ethics Principles, and reflect a broad consensus among industry experts and civil society. For an AI startup, they are the foundational pillars upon which you build a product that users will trust, regulators will respect, and the market will ultimately reward.

[Infographic: The 10 Core Principles of Ethical Product Design. Tiles: Human Well-being, Fairness & Bias, Transparency, Privacy & Dignity, Reliability & Safety, Accountability, Human Oversight, Inclusivity, Sustainability, Lawfulness.]

- Human Well-being & Societal Benefit. This foundational principle mandates that AI systems should be designed to produce beneficial outcomes for people and the planet. It pushes founders to ask a critical question beyond the immediate business case: “How does this product augment human capabilities, enhance creativity, and contribute to positive societal goals?” This includes proactively designing for the inclusion of underrepresented populations and considering the broader impact on global challenges, such as those outlined in the UN’s Sustainable Development Goals. It also extends to the often-overlooked environmental footprint of training and running large-scale AI models.

- Fairness & Bias Mitigation. Fairness is a proactive commitment to building for equity, not just a reactive compliance with anti-discrimination laws. AI systems are products of their training data; if that data reflects historical societal biases related to race, gender, or socioeconomic status, the AI is likely to replicate those biases and can even amplify them. With studies showing that a high percentage of machine learning systems exhibit some form of bias, this is not a hypothetical risk but a near certainty without deliberate intervention.

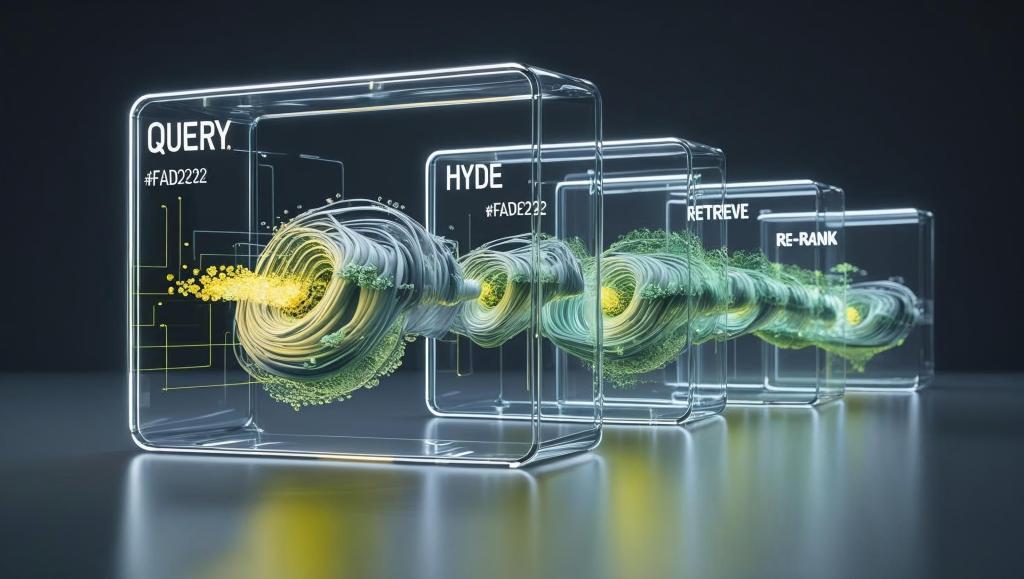

- Transparency & Explainability. To build trust, you must demystify the “black box.” Users, regulators, and even your own internal teams need to understand, at an appropriate level, how an AI system functions and why it arrives at specific decisions. This is not about exposing proprietary algorithms but about providing “plain and easy-to-understand information” regarding the system’s data sources, core logic, capabilities, and limitations. This transparency is the bedrock of user confidence.

- Privacy & Data Dignity. In an era where AI systems process vast quantities of personal data, a robust data governance framework is non-negotiable. This goes beyond mere compliance with regulations like GDPR or CCPA. It requires a fundamental respect for what can be termed “data dignity.” This is achieved by embedding principles like data minimization—collecting only what is absolutely necessary for the AI to function—and providing users with clear, accessible, and meaningful control over their information.

- Reliability, Safety & Security. An AI system must be dependable, operating as intended not only under normal conditions but also during foreseeable misuse or in adverse situations. Reliability ensures consistent predictions. Safety involves engineering the system to prevent harm. Security demands protecting the system and its data from malicious actors, including sophisticated “adversarial attacks” where inputs are crafted to trick a model into producing dangerous outputs.

- Accountability & Traceability. Ultimately, a human or a designated group must be responsible for the AI’s outcomes. For a startup founder, this means establishing clear lines of ownership from day one. True accountability is impossible without traceability—the ability to maintain an auditable record of the datasets, processes, and key decisions made throughout the entire AI system lifecycle.

- Human Agency & Oversight. The goal of AI should be to augment human intelligence, not to supplant it. It is critical to design systems that preserve human agency and allow for meaningful oversight. For high-risk applications, this means building in explicit mechanisms for human intervention—the capacity for a person to question, override, or safely decommission an AI system.

- Inclusivity & Accessibility. This principle ensures that AI systems are designed to be user-centric and accessible to everyone, with special consideration for vulnerable and underrepresented groups who are often most at risk of being marginalized by new technologies. Achieving inclusivity is not an accident; it requires intentional and early consultation with a diverse range of stakeholders.

- Sustainability. Often overlooked by early-stage ventures, sustainability is a critical long-term principle. This has two dimensions: environmental and societal. Environmental sustainability involves being mindful of the significant energy consumption of large-scale models. Societal sustainability requires considering the long-term impacts on the labor market and economic equality.

- Lawfulness & Regulatory Foresight. At a minimum, AI systems must comply with all applicable laws. For a startup, however, the regulatory landscape is evolving at a breakneck pace. True strategic advantage comes from regulatory foresight—building a product that not only meets today’s legal standards but is architected to be adaptable to the stricter requirements of tomorrow.
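To make the Accountability & Traceability principle above more concrete, here is a minimal sketch of an append-only audit trail. It is an illustration under stated assumptions, not a prescribed implementation: the `AuditRecord` fields, the function names, and the example values are all hypothetical, and a real startup would likely pair something like this with its existing version control for models and datasets.

```python
import hashlib
import io
import json
from dataclasses import asdict, dataclass
from datetime import datetime, timezone
from typing import TextIO


@dataclass(frozen=True)
class AuditRecord:
    """One entry in an audit trail. All field names are hypothetical."""
    model_version: str
    dataset_sha256: str
    decision: str
    accountable_owner: str
    recorded_at: str


def fingerprint(data: bytes) -> str:
    """Return a SHA-256 hex digest that identifies the exact dataset bytes."""
    return hashlib.sha256(data).hexdigest()


def make_record(model_version: str, dataset: bytes, decision: str, owner: str) -> AuditRecord:
    """Tie a key decision to a model version, a dataset fingerprint, and an owner."""
    return AuditRecord(
        model_version=model_version,
        dataset_sha256=fingerprint(dataset),
        decision=decision,
        accountable_owner=owner,
        recorded_at=datetime.now(timezone.utc).isoformat(),
    )


def append_record(log: TextIO, record: AuditRecord) -> None:
    """Write the record as one JSON line at the current end of the log."""
    log.write(json.dumps(asdict(record), sort_keys=True) + "\n")


# Hypothetical usage: an in-memory log stands in for a real append-only file.
log = io.StringIO()
record = make_record(
    model_version="v0.3.1",
    dataset=b"age,income\n34,52000\n",
    decision="Dropped ZIP code feature after bias review",
    owner="CTO",
)
append_record(log, record)
```

The design choice worth noting is that every record names a person or role. The hash makes it possible to check later which exact dataset a decision referred to, while the owner field keeps the accountability question from becoming anonymous.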

From Principles to Action: A Founder’s Self-Assessment

A founder’s job is not simply to adopt these principles but to establish a process for consciously navigating the inherent trade-offs between them (e.g., transparency vs. security, fairness vs. privacy). To help translate these ideas into concrete action, this matrix reframes each principle as a core question linked to an actionable startup strategy.

| Principle | Core Question for Founders | Actionable Startup Strategy |
|---|---|---|
| Human Well-being | Does our product genuinely improve lives, or does it create unintended negative consequences? | Define and track non-financial “Well-being KPIs.” Conduct a pre-mortem exercise to brainstorm potential societal harms before launch. |
| Fairness & Bias Mitigation | Could our AI perform differently for different demographic groups? Who might be systematically disadvantaged? | Implement “fairness-aware” machine learning techniques. Conduct regular bias audits on data and models. Ensure your development team is diverse. |
| Transparency & Explainability | Can a non-technical user understand what our AI does, its limitations, and the logic behind its key outputs? | Develop a public-facing “AI Explainer” document. Implement tools like Model Cards to document model details for technical stakeholders. |
| Privacy & Data Dignity | Are we collecting the absolute minimum data necessary? Do users have clear, simple controls over their information? | Adopt a “privacy by design” approach. Make data minimization a core engineering constraint. Build a user-facing dashboard for data management. |
| Reliability, Safety & Security | How could our system fail or be maliciously attacked? What is our plan for when it does? | Conduct adversarial attack simulations on your own models. Define clear operational parameters and create a documented incident response plan. |
| Accountability & Traceability | Who in our company is ultimately accountable for this AI’s output? Can we trace a harmful decision back to its root cause? | Designate an accountable officer (e.g., CEO/CTO). Use version control for models and datasets to ensure full traceability. |
| Human Agency & Oversight | Where is a human “in the loop”? For high-stakes decisions, is there a clear path for human intervention or override? | Map all decision points and classify their risk level. Mandate human-in-the-loop review for all high-risk decisions. |
| Inclusivity & Accessibility | Have we designed and tested our product with input from people with diverse abilities, backgrounds, and experiences? | Conduct user testing with participants from intentionally diverse and underrepresented groups. Adhere to Web Content Accessibility Guidelines (WCAG). |
| Sustainability | What is the environmental cost of our model? What is the long-term impact on the job market and economic equality? | Measure and report on the energy consumption of model training. Author a “Societal Impact Statement” considering long-term effects. |
| Lawfulness & Regulatory Foresight | Does our system comply with current regulations? How are we preparing for future, stricter regulations? | Appoint a team member to track global AI policy developments. Build modular systems that can be easily adapted to new requirements. |
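As one way to start on the “regular bias audits” named in the Fairness row of the matrix, the sketch below compares how often a model makes a positive decision for each demographic group. It is a simplified illustration, not a complete fairness methodology: the group labels, the sample data, and the `REVIEW_THRESHOLD` value are assumptions chosen for the example. The 0.8 threshold echoes the commonly cited “four-fifths” rule of thumb, which is a screening heuristic rather than a definition of fairness.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple

# Assumed screening threshold for this example; pick one suited to your domain.
REVIEW_THRESHOLD = 0.8


def selection_rates(outcomes: Iterable[Tuple[str, int]]) -> Dict[str, float]:
    """Share of positive decisions (1) per group, from (group, decision) pairs."""
    totals: Dict[str, int] = defaultdict(int)
    positives: Dict[str, int] = defaultdict(int)
    for group, decision in outcomes:
        totals[group] += 1
        positives[group] += decision
    return {group: positives[group] / totals[group] for group in totals}


def disparity_ratio(rates: Dict[str, float]) -> float:
    """Lowest selection rate divided by the highest; 1.0 means parity."""
    highest = max(rates.values())
    return min(rates.values()) / highest if highest > 0 else 1.0


# Hypothetical audit sample: (group label, model decision).
sample = [
    ("A", 1), ("A", 1), ("A", 0), ("A", 1),
    ("B", 1), ("B", 0), ("B", 0), ("B", 0),
]
rates = selection_rates(sample)
ratio = disparity_ratio(rates)
needs_review = ratio < REVIEW_THRESHOLD
```

In this toy sample, group A is selected three times out of four and group B once out of four, so the ratio falls well below the assumed threshold and the model would be flagged for a closer look. A flag like this is a prompt for investigation, not a verdict.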

Founder FAQ: Quick Answers on Ethical AI

This section directly answers common questions I hear from founders during mentorship sessions.

[Infographic: The Startup Maturity Curve: From Risk to Resilience. Panel “Typical Startup Risk Profile”: as a startup matures in its ethical AI practices, its risk profile dramatically improves, signaling higher value to savvy investors. Panel “Where to Focus First”: bias is a product failure; the first and most critical step for any founder is to interrogate the training data, the primary source of unfair outcomes.]

- Q: Why is ethical AI important for a startup’s valuation? A: Investors are increasingly sophisticated about risk. A startup that ignores AI ethics is building on a foundation of unmanaged risk—legal, reputational, and technical. Demonstrating a robust ethical framework signals maturity, reduces perceived risk, and can lead to a higher valuation by building a more resilient, trustworthy company.

- Q: What is the very first step to mitigate bias in an AI model? A: The first step happens before you even write code: interrogate your data. Analyze your training dataset for demographic imbalances and historical biases. If your data is skewed, your model will be too. A thorough data audit is the most critical first step in any serious bias mitigation strategy.

- Q: How can a small startup handle AI governance without a big team? A: Start lean. Your initial “governance” can be a recurring 1-hour meeting with the founding team. Use the self-assessment matrix in this article as your agenda. The goal isn’t bureaucracy; it’s about creating a dedicated time to ask these hard questions and documenting your decisions. This builds the discipline of responsible development from Day 1.
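The data audit described in the bias answer above can begin with something very small. The sketch below compares each group's share of a training set against a reference share and flags groups that fall short. Everything specific here is an assumption made for illustration: the `urban`/`rural` labels, the reference shares, and the `tolerance` default are hypothetical, and choosing a meaningful reference population is itself a judgment call the founding team has to own.

```python
from collections import Counter
from typing import Dict, List, Sequence


def group_shares(labels: Sequence[str]) -> Dict[str, float]:
    """Fraction of the dataset that belongs to each group."""
    total = len(labels)
    if total == 0:
        return {}
    return {group: count / total for group, count in Counter(labels).items()}


def underrepresented(
    labels: Sequence[str],
    reference: Dict[str, float],
    tolerance: float = 0.05,
) -> List[str]:
    """Groups whose dataset share is below the reference share minus tolerance."""
    shares = group_shares(labels)
    return sorted(
        group
        for group, expected in reference.items()
        if shares.get(group, 0.0) < expected - tolerance
    )


# Hypothetical training set and reference population shares.
labels = ["urban"] * 80 + ["rural"] * 20
reference = {"urban": 0.6, "rural": 0.4}
flagged = underrepresented(labels, reference)
```

Here rural users make up 20% of the data against an assumed 40% reference, so `rural` is flagged. Representation counts are only a first pass; they say nothing about label quality or historical bias within each group, which still need a human review.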

What’s Next

These ten principles are your company’s ethical constitution. But a constitution is only as powerful as the institutions built to uphold it. Simply having principles on a webpage is not enough.

In the next article in this series, we will move from the “what” to the “how.” Part 2: The Governance Playbook will provide a lean, actionable framework for building a Responsible AI Council and developing the core policies your startup needs to translate these principles into practice, day in and day out.