The era of generative AI as a speculative novelty is over. With private investment in generative AI soaring to nearly $34 billion in 2024, the mandate is clear: move beyond clever chatbots and deliver tangible ROI by automating complex, mission-critical workflows. Yet, as organizations rush to deploy these powerful models, they are colliding with a formidable barrier: the trust deficit.

The very “creativity” that makes Large Language Models (LLMs) so impressive is also their greatest liability in an enterprise context. Their propensity to “hallucinate”—to generate plausible but factually incorrect information—is not a fringe bug; it is a fundamental characteristic. With many organizations reporting negative consequences from AI inaccuracies, the path to production is no longer about what a model can do, but what it can be trusted to do.

This imperative has led to the rise of Retrieval-Augmented Generation (RAG) as the dominant architecture for enterprise AI. The concept is simple and powerful: ground the LLM’s response in a corpus of verifiable, private data, turning a creative storyteller into a knowledgeable expert. However, the initial, “naive” implementation of RAG is proving to be a fragile foundation. Simply performing a vector search and stuffing the results into a prompt is like giving a brilliant student a disorganized, incomplete pile of notes moments before an exam. The potential is there, but the performance is unreliable.

To truly unlock the transformative power of generative AI, we must move beyond this naive approach. We must become engineers of trust, architecting sophisticated, multi-stage RAG pipelines that are resilient, accurate, and reliable by design. This is the RAG Renaissance, and it is less about model training and more about rigorous information science.

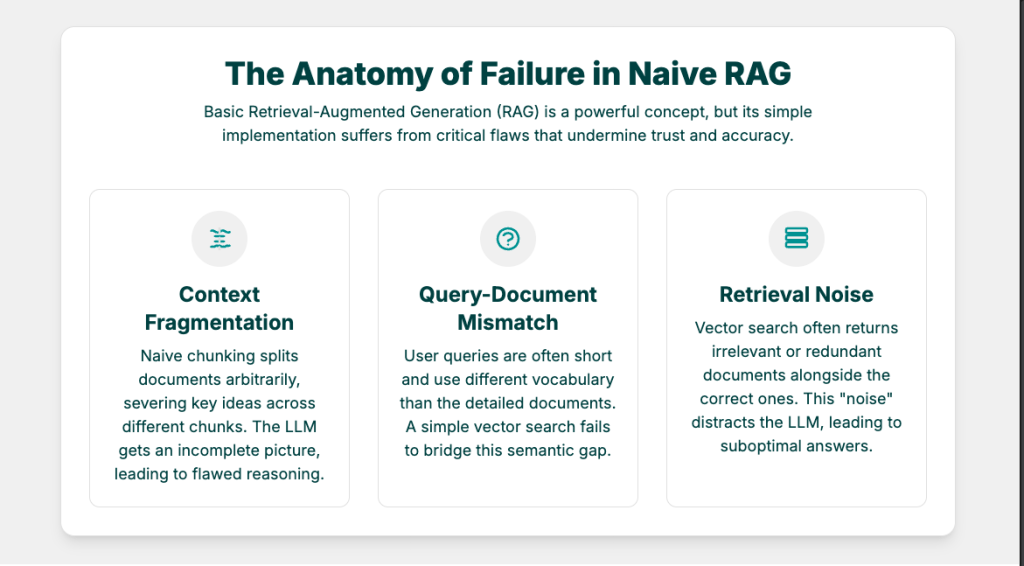

The Anatomy of Failure in Naive RAG

To build a better system, we must first understand the precise failure modes of a simple RAG pipeline. They fall into three main categories:

- Context Fragmentation: Naive chunking strategies split documents at arbitrary points. A single, crucial idea can be severed across two chunks, leaving the LLM with an incomplete picture. The model receives a sentence fragment or a paragraph that references a table in the next chunk, which it never sees.

- Query-Document Mismatch: The language of a user’s query is often profoundly different from the language of the knowledge base. A user asks, “Is our new product line profitable?” while the financial report talks about “Q3 revenue streams for the ‘Odyssey’ product family exceeding operational expenditure.” A simple vector search, looking for semantic overlap, can easily miss the connection.

- Retrieval Noise: Vector search is optimized for speed and recall, not precision. It dutifully returns the top k results, but this list is often noisy, containing redundant information or passages that are only tangentially related to the query. This “noise” can distract the LLM, causing it to focus on the wrong information and deliver a suboptimal, or even incorrect, answer.
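Context fragmentation is easy to reproduce. The snippet below is a minimal sketch, assuming a fixed-size character chunker (the function name `fixed_size_chunks` and the two-sentence document are hypothetical, chosen only for illustration). It shows a fact and the table reference that follows it being cut at arbitrary points.

```python
def fixed_size_chunks(text: str, size: int) -> list[str]:
    """Split text every `size` characters, ignoring sentence boundaries."""
    return [text[i:i + size] for i in range(0, len(text), size)]


# Hypothetical document, invented for this illustration.
doc = (
    "Odyssey revenue exceeded operational expenditure in Q3. "
    "See Table 4 for the regional breakdown."
)

chunks = fixed_size_chunks(doc, size=40)
for i, chunk in enumerate(chunks):
    # The first chunk stops in the middle of a word, so neither it nor
    # the second chunk carries the complete first sentence.
    print(i, repr(chunk))
```

Nothing is lost overall, since the chunks concatenate back to the original text, but no single chunk holds the whole first sentence, which is exactly what a retriever would hand to the LLM.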

A production-grade RAG system is one that systematically addresses each of these failure points through a sophisticated, multi-stage pipeline.

Blueprint for a Trustworthy RAG Engine

A modern RAG architecture is a sequence of quality-control gates, ensuring that only the most relevant, context-rich, and precise information reaches the LLM for synthesis. It involves advanced techniques at both the pre-retrieval and post-retrieval stages.

1. Pre-Retrieval: Sharpening the Search

Before we even search for an answer, we must optimize how we store our knowledge and how we ask our questions.

- Sentence-Window Retrieval: To counter context fragmentation, we move away from chunking large paragraphs. Instead, we embed individual, highly specific sentences, which allows for very precise matching. However, when a sentence is retrieved, we don’t pass that sentence alone to the LLM. We also retrieve its “window”: the one or two sentences before and after it. This provides the crucial local context (pronouns, acronyms, relationships) that the LLM needs to make sense of the retrieved fact, giving us the best of both worlds: high-precision search and high-quality context.

- Hypothetical Document Embeddings (HyDE): To bridge the query-document mismatch, we use an LLM to help us ask a better question. Instead of directly embedding the user’s terse query, we first pass it to an LLM with a simple instruction: “Generate a detailed, hypothetical answer to this question.” The resulting text is a rich, descriptive paragraph that is far more structurally similar to the documents in our knowledge base. We then embed this hypothetical answer and use it for the vector search. The hypothetical answer may itself be factually wrong; that is acceptable, because it serves only as a search key and is never shown to the user. This technique acts as a translator, converting user intent into the language of the document corpus, and it often improves retrieval relevance substantially.
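The two pre-retrieval techniques above can be sketched together in a few lines. This is a toy illustration under loud assumptions: `embed` is a bag-of-words stand-in for a real embedding model (so it matches shared vocabulary, not meaning), `hypothetical_answer` returns canned text where a real system would call an LLM, and the four-sentence document is invented.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy stand-in for an embedding model: lowercase word counts."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def split_sentences(text: str) -> list[str]:
    """Naive splitter: break after ., ! or ? followed by whitespace."""
    return [s for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s]


def best_sentence(search_text: str, sentences: list[str]) -> int:
    """Index of the single sentence most similar to the search text."""
    q = embed(search_text)
    return max(range(len(sentences)), key=lambda i: cosine(q, embed(sentences[i])))


def with_window(sentences: list[str], index: int, window: int = 1) -> str:
    """Return the matched sentence plus `window` neighbours on each side."""
    lo = max(0, index - window)
    hi = min(len(sentences), index + window + 1)
    return " ".join(sentences[lo:hi])


def hypothetical_answer(question: str) -> str:
    """Placeholder for an LLM call (assumption). The canned text mimics the
    style of a report; it does not need to be factually correct."""
    return "Yes. Revenue streams exceeded operational expenditure, so it is profitable."


# Hypothetical knowledge-base document, invented for this illustration.
doc = (
    "The Odyssey product family launched in Q1. "
    "Its Q3 revenue streams exceeded operational expenditure. "
    "Margins are expected to widen next year. "
    "Headcount in the Lisbon office grew by ten."
)
sentences = split_sentences(doc)
question = "Is our new product line profitable?"

direct_hit = best_sentence(question, sentences)                     # raw query
hyde_hit = best_sentence(hypothetical_answer(question), sentences)  # HyDE
context = with_window(sentences, hyde_hit, window=1)
print(direct_hit, hyde_hit)
print(context)
```

In this toy setup the raw question matches the launch sentence (it shares only the word "product"), while the hypothetical answer matches the sentence about revenue and expenditure. The window then restores the preceding sentence, which is what tells the LLM that "Its" refers to the Odyssey product family.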

2. Post-Retrieval: Curating the Evidence

Once we have an initial set of candidate documents, the work is not done. We must now act as critical curators, ensuring only the highest-quality evidence is presented to our final “reasoning engine”—the LLM.

- Cross-Encoder Re-ranking: This is perhaps the most critical step for achieving state-of-the-art performance. A standard vector search (using a bi-encoder) is fast because it creates embeddings for the query and documents independently. A cross-encoder, while slower, is far more powerful. It examines the query and a potential document together, allowing it to capture fine-grained interactions and nuances. The optimal strategy is a two-stage process. First, use the fast bi-encoder to retrieve a broad set of candidate documents (e.g., the top 50). Then, use a more precise cross-encoder to re-rank this smaller set and keep only the highest-scoring passages. This is analogous to a legal team first gathering all possible evidence for a case, and then carefully selecting and ordering the most compelling pieces to present to the jury. The LLM’s final prompt is then populated with the most relevant passages, minimizing distraction and improving accuracy.
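The two-stage process can be sketched as follows. This is a minimal illustration, not a production recipe: `bi_encoder_score` is a bag-of-words stand-in for independent embeddings, and `toy_pair_score` is a hypothetical stand-in for a trained cross-encoder that simply rewards query word pairs appearing in the same order in the document. The documents and the figure they mention are invented.

```python
import math
import re
from collections import Counter
from typing import Callable


def tokens(text: str) -> list[str]:
    return re.findall(r"[a-z0-9]+", text.lower())


def bi_encoder_score(query: str, doc: str) -> float:
    """Toy bi-encoder: each text is encoded on its own, then compared by cosine."""
    q, d = Counter(tokens(query)), Counter(tokens(doc))
    dot = sum(q[t] * d[t] for t in q)
    norm_q = math.sqrt(sum(v * v for v in q.values()))
    norm_d = math.sqrt(sum(v * v for v in d.values()))
    return dot / (norm_q * norm_d) if norm_q and norm_d else 0.0


def toy_pair_score(query: str, doc: str) -> float:
    """Toy cross-encoder stand-in: reads both texts together and counts
    query bigrams that occur in the same order in the document."""
    q, d = tokens(query), tokens(doc)
    doc_bigrams = set(zip(d, d[1:]))
    return float(sum(1 for bigram in zip(q, q[1:]) if bigram in doc_bigrams))


def retrieve_then_rerank(
    query: str,
    docs: list[str],
    pair_score: Callable[[str, str], float],
    first_stage_k: int = 50,
    final_k: int = 3,
) -> list[str]:
    # Stage 1: cheap, broad recall with independently encoded texts.
    candidates = sorted(
        docs, key=lambda doc: bi_encoder_score(query, doc), reverse=True
    )[:first_stage_k]
    # Stage 2: slower, joint scoring applied to the shortlist only.
    return sorted(
        candidates, key=lambda doc: pair_score(query, doc), reverse=True
    )[:final_k]


# Hypothetical corpus, invented for this illustration.
docs = [
    "Odyssey margin notes: operating hours for the support desk.",
    "The operating margin for Odyssey rose to 18 percent in Q3.",
    "Headcount in the Lisbon office grew by ten.",
]
query = "operating margin for Odyssey"

first_stage_top = max(docs, key=lambda doc: bi_encoder_score(query, doc))
reranked = retrieve_then_rerank(query, docs, toy_pair_score, first_stage_k=3, final_k=2)
print(first_stage_top)
print(reranked[0])
```

Here the first stage prefers the support-desk note because it is short and contains every query word, while the pairwise scorer promotes the passage that actually discusses the operating margin. In a real pipeline a trained cross-encoder model would take the place of `toy_pair_score`; the surrounding two-stage structure stays the same.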

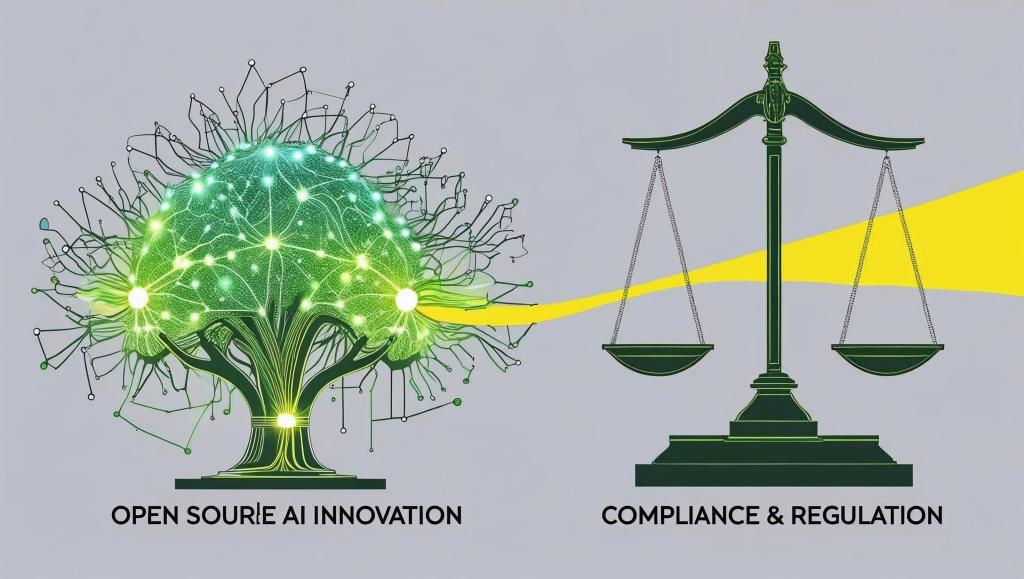

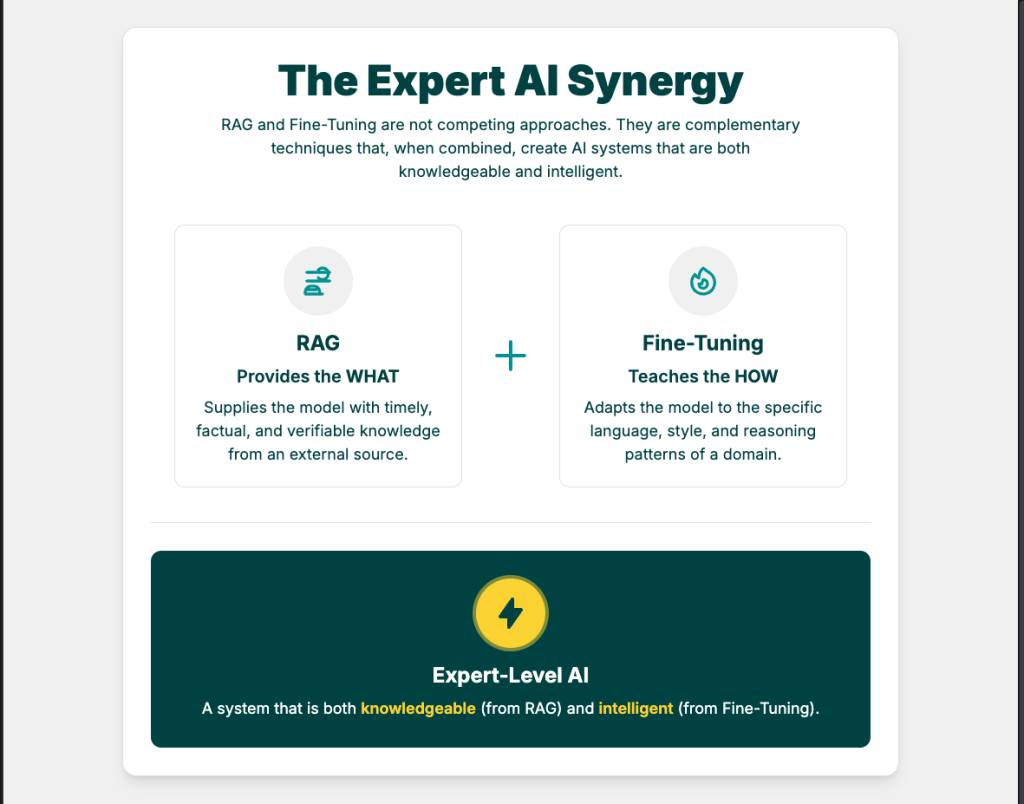

The Next Frontier: Combining Knowledge with Intelligence

Building a robust RAG pipeline solves the problem of grounding an LLM in verifiable facts. However, to create a true digital expert, we must address another dimension: the model’s inherent reasoning capability and stylistic fluency within a specific domain. This is where RAG and fine-tuning cease to be competing strategies and become a powerful, synergistic pair.

- RAG provides the what: It is the knowledge librarian, supplying the model with timely, factual, and verifiable information from an external source. Its strength is its dynamism; the knowledge base can be updated easily without retraining the model.

- Fine-tuning provides the how: It is the domain expert tutor, teaching the model the specific language, style, structure, and implicit reasoning patterns of a specialized field like finance or law. Its strength is in improving the model’s ability to interpret and synthesize domain-specific information in a stylistically appropriate manner.

The optimal architecture for many advanced applications, therefore, involves using a fine-tuned model to reason over context provided by a RAG system. This hybrid approach creates an AI that is not only knowledgeable but also intelligent, capable of delivering expert-level analysis that is both factually correct and contextually brilliant.
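One way to picture this hybrid is as a thin orchestration layer in which the retriever and the generator are interchangeable parts. The sketch below assumes hypothetical `retrieve` and `generate` callables (in practice, the RAG pipeline and a fine-tuned model, respectively); the stubs, source identifiers, and passages are invented so the example runs on its own.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Passage:
    source_id: str  # where the text came from, kept for citation
    text: str


def build_prompt(question: str, passages: list[Passage]) -> str:
    """Number each passage so the generated answer can cite its evidence."""
    evidence = "\n".join(
        f"[{i}] ({p.source_id}) {p.text}" for i, p in enumerate(passages, start=1)
    )
    return (
        "Answer using only the numbered evidence and cite it like [1].\n\n"
        f"Evidence:\n{evidence}\n\nQuestion: {question}\nAnswer:"
    )


def answer(
    question: str,
    retrieve: Callable[[str], list[Passage]],
    generate: Callable[[str], str],
) -> dict:
    """RAG supplies the 'what' (passages); the generator supplies the 'how'."""
    passages = retrieve(question)
    prompt = build_prompt(question, passages)
    return {
        "answer": generate(prompt),
        "sources": [p.source_id for p in passages],
    }


# Stubs standing in for a real retriever and a fine-tuned model (assumptions).
def stub_retrieve(question: str) -> list[Passage]:
    return [
        Passage("q3-report.pdf#p4", "Odyssey Q3 revenue exceeded operational expenditure."),
        Passage("q3-report.pdf#p5", "Margins are expected to widen next year."),
    ]


def stub_generate(prompt: str) -> str:
    return "Yes, the Odyssey line was profitable in Q3 [1]."


result = answer("Is our new product line profitable?", stub_retrieve, stub_generate)
print(result["answer"])
print(result["sources"])
```

Keeping the source identifiers alongside the generated text means the knowledge base can be refreshed, or the model swapped for a newly fine-tuned one, without changing how answers are traced back to their evidence.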

The ROI of Trust

Building this advanced pipeline is not an academic exercise; it is an investment with a clear and compelling return. By systematically engineering trust into our AI systems, we move from tentative pilots to transformative production deployments.

A system built on these principles doesn’t just provide more accurate answers. It creates a defensible audit trail, citing the precise sources for its conclusions. It reduces the risk of costly legal and reputational damage from AI-driven errors. It unlocks the ability to automate high-stakes, knowledge-intensive workflows in finance, law, and R&D that were previously off-limits.

The conversation around generative AI is maturing. The initial awe at its capabilities is being replaced by a sober assessment of its risks. In this new landscape, the most valuable technical leaders will not be those who can simply connect to an API, but those who can architect systems of trust. The future of enterprise AI will be built not on the raw power of LLMs, but on the intelligent and rigorous engineering of the systems that surround them. The RAG Renaissance is here, and it demands we become architects of reliability.