When executives tell me “principles slow us down,” I ask a simple question: What would change if your build system refused to ship unless trust checks passed—just like security tests? The real bottleneck in enterprise AI isn’t ethics; it’s rework. Most organizations still treat “responsible AI” as an after-the-fact review, leading to costly compliance debt as teams scramble to retrofit safety checks, add manual reviews, and produce audit artifacts that were never captured during development.

In a year when generative AI moved from labs to line-of-business software, this approach is no longer tenable. Fortunately, we have a global north star. In May 2024, the OECD refreshed its landmark AI Principles to address the realities of general-purpose and generative AI, tightening the emphasis on safety, privacy, intellectual property, and information integrity. The question facing leaders isn’t whether to adopt these principles—it’s how to wire them directly into products, processes, and platforms to ship faster and safer.

The Landscape Has Changed: From Theory to Text

The pressure to move from principles on a poster to policies in production is coming from all sides. Regulators have moved from theory to text. The EU AI Act, for instance, entered into force in August 2024 and phases in its obligations over the following years, which will inevitably influence vendor contracts and procurement checklists worldwide.

At the same time, standard-setters are getting specific. In the U.S., the National Institute of Standards and Technology (NIST) released its Generative AI Profile (NIST AI 600-1) in July 2024 as a companion to its influential AI Risk Management Framework. In practice, its suggested actions work as a ready-made control catalog that engineering teams can map directly to the OECD’s values-based principles.

Finally, incidents are out in the open. The OECD’s AI Incidents & Hazards Monitor (AIM) now documents cases globally with a standardized methodology, making trends measurable across sectors. This changes the boardroom conversation: risk patterns are observable, peer benchmarks exist, and time-to-mitigation is a performance metric, not a vague promise.

A New Operating Model: From Policy to Product with PRIME

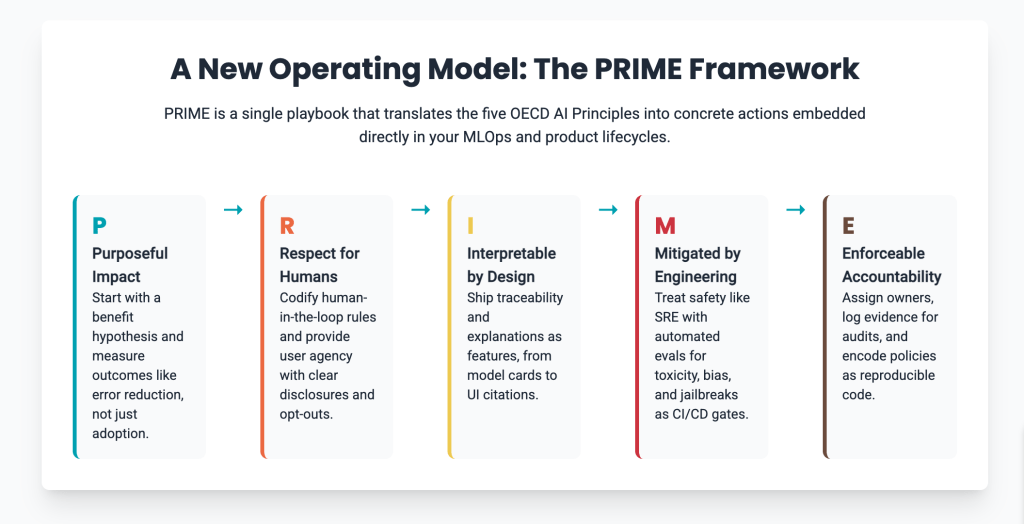

To move from well-meaning principles to hardened production code, leaders need a new operating model, one that translates values into engineering requirements. This model, which I call PRIME, embeds the OECD’s five values-based principles into the MLOps and product lifecycles. It’s a single playbook that engineers, designers, risk leaders, and procurement can all use.

- P – Purposeful Impact (Inclusive growth and well-being): Start each AI feature with a benefit hypothesis and an affected-groups map. Define who should benefit and how you’ll measure it (e.g., cycle time reduced, accessibility gains, error reduction). Make these outcomes part of the product’s definition of done.

- R – Respect for Humans (Human-centered values and fairness): Codify where humans stay in the loop and why. Pre-register protected contexts (e.g., employment, health, credit) that trigger stricter controls. Document decision rights and provide user agency through clear disclosures, opt-outs, and correction channels.

- I – Interpretable by Design (Transparency and explainability): Ship traceability and explanations as features. Maintain model cards, data sheets, and prompt/version lineage. In the UI, present source citations and confidence levels, tailoring explanations to the audience, from a clinician who needs source spans to a consumer who needs plain-language context.

- M – Mitigated by Engineering (Robustness, security, and safety): Treat safety like SRE. Create automated evaluations for hallucination, toxicity, prompt-injection, and jailbreak resilience. Set release gates (e.g., jailbreak success rate ≤ 1%) and bind kill switches to live thresholds. Test for privacy leakage and license violations as part of CI/CD.

- E – Enforceable Accountability (Accountability): Assign accountable owners and define the evidence you’ll keep: datasets, prompts, model versions, evaluation results, and production logs. Encode policies as code so audits are reproducible. Make vendors deliver evidence artifacts and accept incident-sharing clauses.
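To make the release gate under M concrete, here is a minimal sketch of how a CI step might compare evaluation results against thresholds. The `Gate` class, the metric names, and the privacy threshold are my own illustrative assumptions; only the 1% jailbreak limit comes from the example above. Treat it as a starting point, not a reference implementation.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Gate:
    """One release gate: a metric name and the highest value allowed to ship."""
    metric: str
    max_value: float


# Hypothetical gates; only the 1% jailbreak limit is taken from the article.
GATES = [
    Gate("jailbreak_success_rate", 0.01),
    Gate("privacy_leakage_rate", 0.0),
]


def failed_gates(results: dict[str, float], gates: list[Gate]) -> list[str]:
    """Return the names of the gates that block the release.

    A metric missing from the results fails its gate, so a skipped
    evaluation can never pass silently.
    """
    failures = []
    for gate in gates:
        value = results.get(gate.metric)
        if value is None or value > gate.max_value:
            failures.append(gate.metric)
    return failures


if __name__ == "__main__":
    # Illustrative numbers, not real evaluation results.
    eval_results = {"jailbreak_success_rate": 0.004, "privacy_leakage_rate": 0.0}
    blocked = failed_gates(eval_results, GATES)
    if blocked:
        raise SystemExit(f"release blocked by: {', '.join(blocked)}")
    print("release allowed")
```

The design choice worth copying is the fail-closed default: an evaluation that did not run counts as a failure, which is what keeps the gate meaningful when a pipeline step is skipped.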

What PRIME Looks Like in Your Stack

PRIME isn’t just a checklist; it’s a blueprint for your entire technology stack. Adopting the OECD Principles as your north star, you can map them to NIST’s controls and build a lightweight policy-as-code service to enforce them.

Here is a simplified view of how the framework translates high-level principles into measurable actions across your organization:

| OECD Principle | PRIME Dimension | Key Action in the Stack | Core KPI |
|---|---|---|---|
| Inclusive Growth & Well-being | P – Purposeful Impact | Define benefit hypothesis in Governance Plane | P2P Coverage |
| Human-Centered Values & Fairness | R – Respect for Humans | Codify human-in-the-loop rules in Experience Plane | P2P Coverage |
| Transparency & Explainability | I – Interpretable by Design | Ship model cards & UI explanations from the Data Plane | P2P Coverage |
| Robustness, Security & Safety | M – Mitigated by Engineering | Automate evals as release gates in the Model Plane | Intelligence Velocity |
| Accountability | E – Enforceable Accountability | Log evidence and run audits from the Operations Plane | P2P Coverage |

- Governance Plane: Adopt the OECD Principles and map them to NIST controls. Build a policy-as-code service with machine-checkable rules (e.g., “no deployment without provenance tags”).

- Data Plane: Implement data contracts that carry provenance, license, and consent information. Automate leakage tests and license checks before release.

- Model/Agent Plane: Maintain a catalog of approved base models. Standardize an evaluation harness that runs unit, scenario, and adversarial tests for every release.

- Experience Plane: Make transparency visible but unobtrusive with “Why you’re seeing this” disclosures and easy feedback channels.

- Operations Plane: Stand up an AI incident registry aligned to the OECD AIM taxonomy. Review incidents weekly to extract new adversarial test cases and track mitigation time.
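A machine-checkable rule such as “no deployment without provenance tags” can be very small. The sketch below assumes a hypothetical `DeploymentManifest` that carries the data contract fields named in the Data Plane (provenance, license, consent) and a hypothetical catalog of approved base models. The names and values are illustrative, not a real policy engine’s API.

```python
from dataclasses import dataclass, field


@dataclass
class DeploymentManifest:
    """Hypothetical description of a release candidate and its data contract tags."""
    feature: str
    model_id: str
    tags: dict[str, str] = field(default_factory=dict)


# Assumed required tags, mirroring the data contract fields named above.
REQUIRED_TAGS = ("provenance", "license", "consent")

# Assumed catalog of approved base models (placeholder identifiers).
APPROVED_MODELS = {"base-model-a", "base-model-b"}


def policy_violations(manifest: DeploymentManifest) -> list[str]:
    """Evaluate every rule and return readable violations; empty means deployable."""
    violations = []
    for tag in REQUIRED_TAGS:
        # A tag that is absent or blank counts as missing.
        if not manifest.tags.get(tag, "").strip():
            violations.append(f"missing tag: {tag}")
    if manifest.model_id not in APPROVED_MODELS:
        violations.append(f"model not in approved catalog: {manifest.model_id}")
    return violations
```

Calling `policy_violations` from the deployment pipeline and refusing to proceed on a non-empty list gives auditors a reproducible record: the same manifest always yields the same verdict.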

Two KPIs That Make Trust Measurable

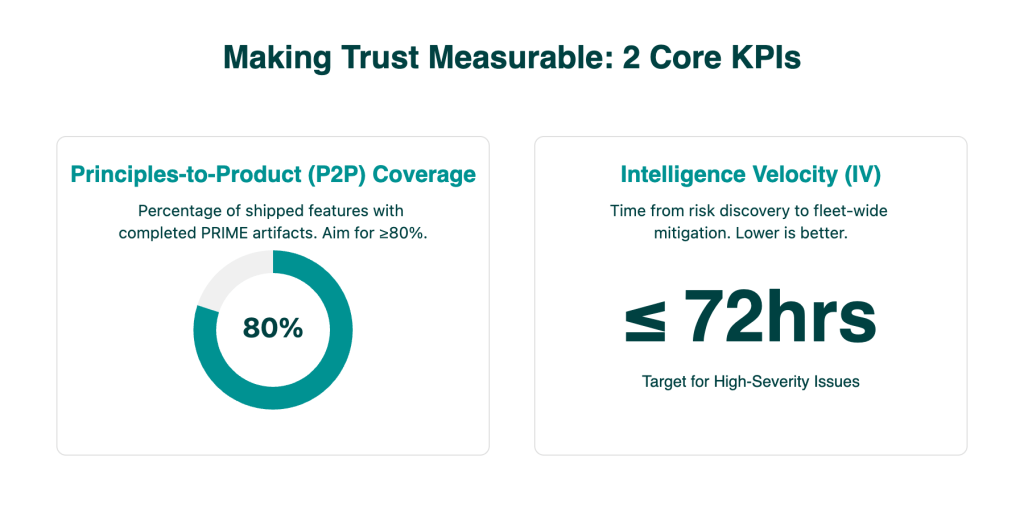

To make this real, you must measure it. These two metrics convert “responsibility” from a narrative into an engineering discipline.

- Intelligence Velocity (IV): The elapsed time from a new risk discovery (e.g., a novel jailbreak vector) to fleet-wide mitigation (guardrails updated, tests added, models redeployed). Lower is better.

- Principles-to-Product Coverage (P2P): The percentage of shipped AI features with completed PRIME artifacts: a benefit hypothesis, fairness/eval report, explanation UX, safety gates, and an audit trail. Aim for ≥80% in your first quarter.
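Both metrics reduce to simple arithmetic once the underlying records exist. The following sketch assumes each shipped feature is tracked as a set of completed artifact names; the key names are hypothetical labels for the five PRIME artifacts listed in the P2P definition.

```python
from datetime import datetime, timedelta

# The five PRIME artifacts from the P2P definition (key names are assumptions).
PRIME_ARTIFACTS = frozenset({
    "benefit_hypothesis",
    "fairness_eval_report",
    "explanation_ux",
    "safety_gates",
    "audit_trail",
})


def intelligence_velocity(discovered: datetime, mitigated: datetime) -> timedelta:
    """Elapsed time from risk discovery to fleet-wide mitigation (lower is better)."""
    if mitigated < discovered:
        raise ValueError("mitigation cannot precede discovery")
    return mitigated - discovered


def p2p_coverage(features: dict[str, set[str]]) -> float:
    """Percentage of shipped features that have every PRIME artifact."""
    if not features:
        return 0.0
    complete = sum(1 for artifacts in features.values() if PRIME_ARTIFACTS <= artifacts)
    return 100.0 * complete / len(features)
```

For example, a risk discovered at 09:00 on a Monday and mitigated fleet-wide at 15:00 that Wednesday has an IV of 54 hours, and a portfolio where three of four shipped features carry all five artifacts has a P2P of 75%.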

A 90-Day Plan to Start Tomorrow

Month 1 — Baseline and Controls: Pick one revenue-adjacent GenAI use case. Adopt the OECD Principles as your standard and map them to the NIST Generative AI Profile. Create the minimum viable control book for this use case and wire the evidence checks into your CI pipeline.

Month 2 — Instrument and Iterate: Build or extend your evaluation harness for hallucination, toxicity, and jailbreak attempts. Stand up an incident registry mapped to the OECD AIM taxonomy and run a weekly triage where engineering, product, and risk join forces.

Month 3 — Decide to Scale: Track your IV and P2P metrics. If IV is under 72 hours for high-severity issues and P2P is over 80%, expand PRIME to a second use case. Prepare a one-page readiness note for the EU AI Act, noting your risk category hypothesis and evidence you already collect.
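The Month 3 decision can be written down as a rule so nobody argues about it in the review. This sketch makes one assumption the plan leaves open: it requires every high-severity incident, not the average, to come in under 72 hours. The function and constant names are illustrative.

```python
from datetime import timedelta

# Thresholds from the Month 3 criteria.
MAX_HIGH_SEVERITY_IV = timedelta(hours=72)
MIN_P2P_PERCENT = 80.0


def ready_to_scale(high_severity_ivs: list[timedelta], p2p_percent: float) -> bool:
    """True when every high-severity incident was mitigated in under 72 hours
    and P2P coverage is above 80%.

    Assumption: the worst case decides, not the mean. With no high-severity
    incidents recorded, the IV condition is treated as met.
    """
    iv_ok = all(iv < MAX_HIGH_SEVERITY_IV for iv in high_severity_ivs)
    return iv_ok and p2p_percent > MIN_P2P_PERCENT
```

Using the worst case is the stricter reading; teams that prefer a median or a percentile can swap the `all(...)` check without changing the rest.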

The Takeaway

Principles don’t slow you down; rework does. By treating the OECD AI Principles as engineering requirements, you can build trust directly into your development pipelines. Start with one use case this quarter. Make the work visible in your dashboards, measure Intelligence Velocity and P2P Coverage, and give your board a story about AI that’s not just exciting, but dependable.